Flows provide a powerful way to process your data in bulk. In this article I give a quick overview of how you can use Flows to carry out some common data clean-up operations.

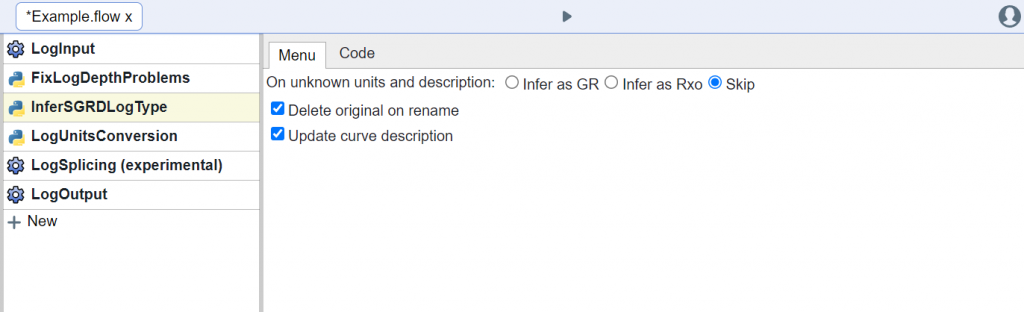

The Flow shown in the screenshot above performs the following operations:

- Brings the log data into the flow (Log Input tool)

- Fixes problems with log depth (Fix Log Depth Problems tool)

- Splits curves aliased to SGRD into either gamma ray or micro-resistivity curve types (Infer SGRD Log Type tool)

- Converts and renames units to the correct unit space (Log Units Conversion tool)

- Splices curves of the same type together (Log Splicing tool)

- Outputs the logs in a new database for future use (Log Output tool)

Log Depth Problems

In some wells the depth column can have a number of problems. For example, it might have negative depths or inconsistent depth steps. Our Fix Log Depth Problems tool addresses some of these basic issues, which can otherwise lead to unintended downstream consequences. Negative depth steps can cause issues with gradient calculations that assume you start at the surface. Inconsistent steps can cause issues when averaging or computing trends.
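
To make these problems concrete, here is a minimal Python sketch that flags them in a depth column. It is not the Fix Log Depth Problems tool itself: the function name `find_depth_problems`, the `tolerance` parameter, and the choice of the median step as the expected sampling interval are all assumptions made for illustration.

```python
def find_depth_problems(depths, tolerance=1e-6):
    """Flag negative depths, non-increasing steps, and irregular step sizes.

    Hypothetical helper for illustration; returns sample indices per problem.
    """
    problems = {
        # Indices of samples whose depth is below zero.
        "negative_depths": [i for i, d in enumerate(depths) if d < 0],
        "non_increasing_steps": [],
        "irregular_steps": [],
    }
    steps = [b - a for a, b in zip(depths, depths[1:])]
    if not steps:
        return problems
    # Assumption: the median step is the intended sampling interval.
    expected = sorted(steps)[len(steps) // 2]
    for i, step in enumerate(steps):
        if step <= 0:
            # Depth went backwards (or repeated) arriving at sample i + 1.
            problems["non_increasing_steps"].append(i + 1)
        elif abs(step - expected) > tolerance:
            # Step is positive but differs from the expected interval.
            problems["irregular_steps"].append(i + 1)
    return problems


depths = [-0.5, 0.0, 0.5, 1.0, 0.75, 1.25, 2.25]
report = find_depth_problems(depths)
print(report)
```

A check like this only reports where the trouble is; how to repair each case (drop, re-sort, or resample) is a separate decision.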

SGRD Mnemonic

The SGRD curve alias was split out in Danomics because there are curve name collisions with many of the mnemonics that contain “GRD”. These can be either gamma ray or micro-resistivity curves, and knowing the difference is important. We have tried to handle this in the CPI, but that solution can only go so far. That is why you should consider running the Infer SGRD Log Type flow tool. It looks at the curve units and description to infer the curve type and renames the curve as appropriate. This lets you use all the available data without mis-categorizing well logs.
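
The idea of inferring a curve type from its units and description can be sketched in a few lines of Python. This is only an illustration, not the logic inside the Infer SGRD Log Type tool: the unit sets, the keywords, and the function name `infer_sgrd_type` are assumptions.

```python
# Assumed unit spellings; a real project would need a fuller table.
GAMMA_UNITS = {"GAPI", "API"}
RESISTIVITY_UNITS = {"OHMM", "OHM.M", "OHM-M"}


def infer_sgrd_type(units, description=""):
    """Guess whether a curve is gamma ray or micro-resistivity (hypothetical helper)."""
    unit = units.strip().upper()
    # Units are checked first because they are usually less ambiguous.
    if unit in GAMMA_UNITS:
        return "gamma_ray"
    if unit in RESISTIVITY_UNITS:
        return "micro_resistivity"
    # Fall back to keywords in the free-text description.
    text = description.upper()
    if "GAMMA" in text:
        return "gamma_ray"
    if "RESIST" in text:
        return "micro_resistivity"
    return "unknown"


print(infer_sgrd_type("gapi"))
print(infer_sgrd_type("", "Shallow guard resistivity"))
```

Returning `"unknown"` rather than guessing keeps ambiguous curves visible so they can be reviewed by hand.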

Log Units Conversion

Log units are almost as varied as log mnemonics! For example, a neutron log can be reported in percent or decimal… but wait! It’s not that easy, because percent can be written as “%”, “PCT”, “PERCENT”, “PRCT”, “PU”, “P.U.”, and so on. And the same is true for decimal units. That’s why we have built up a table of common unit mnemonics that you can apply to make sure that you are getting all your curves in the right unit space.
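
As a rough illustration of how a unit mnemonic table works, the Python sketch below maps the percent spellings listed above onto a single decimal unit. The decimal spellings in `DECIMAL_UNITS`, the output label `"v/v"`, and the function name `to_decimal` are assumptions and do not reflect the actual Danomics table.

```python
# Percent spellings taken from the text above.
PERCENT_UNITS = {"%", "PCT", "PERCENT", "PRCT", "PU", "P.U."}
# Assumed decimal spellings, for illustration only.
DECIMAL_UNITS = {"V/V", "DEC", "DECIMAL", "FRAC"}


def to_decimal(values, units):
    """Return (values, unit) with the curve expressed as a decimal fraction."""
    unit = units.strip().upper()
    if unit in PERCENT_UNITS:
        # Percent to fraction: 25 percent becomes 0.25.
        return [v / 100.0 for v in values], "v/v"
    if unit in DECIMAL_UNITS:
        # Already a fraction; only the unit label is standardized.
        return list(values), "v/v"
    raise ValueError(f"Unrecognized unit mnemonic: {units!r}")


converted, unit = to_decimal([25.0, 50.0], "p.u.")
print(converted, unit)
```

Raising an error on an unrecognized mnemonic is a deliberate choice here: silently passing the curve through would leave it in the wrong unit space.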

Log Splicing

You often have the same types of logs from multiple runs or tools or digitization efforts that you’ll want to put together into a final curve. Danomics composites curves in the CPI – we don’t splice them. The distinction here is that the curves go in based on the priority in the alias table and are simply merged together. With the Log Splicing Flow tool we do the following:

- Identify a primary curve based on criteria such as log depth coverage, curve values, and units

- Identify other curves that meet filter criteria on curve values and units

- Evaluate overlap between curves

- (Optionally) Normalize curves based on overlapping values

- Splice the non-primary curves into the primary curves based on order of priority from curve scoring
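
The steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the Log Splicing tool's implementation: curves are equal-length lists on a shared depth grid with `None` for nulls, the primary curve is chosen on coverage alone, and normalization is a constant shift computed from the overlap. The names `coverage`, `overlap_offset`, and `splice_curves` are hypothetical.

```python
def coverage(curve):
    """Number of non-null samples in a curve."""
    return sum(v is not None for v in curve)


def overlap_offset(primary, other):
    """Mean difference (primary - other) where both curves have data."""
    diffs = [p - o for p, o in zip(primary, other)
             if p is not None and o is not None]
    return sum(diffs) / len(diffs) if diffs else 0.0


def splice_curves(curves, normalize=False):
    """Fill gaps in the best-covered curve from the remaining curves."""
    # Assumption: score curves by coverage only; the highest score is primary.
    ranked = sorted(curves, key=coverage, reverse=True)
    primary = ranked[0]
    spliced = list(primary)
    for curve in ranked[1:]:
        # Optionally shift the curve so it matches the primary in the overlap.
        offset = overlap_offset(primary, curve) if normalize else 0.0
        for i, value in enumerate(curve):
            # Only fill samples that are still null in the spliced result.
            if spliced[i] is None and value is not None:
                spliced[i] = value + offset
    return spliced


run_a = [None, None, 50.0, 60.0, 70.0, 80.0]
run_b = [40.0, 45.0, 52.0, None, None, None]
print(splice_curves([run_b, run_a]))
print(splice_curves([run_b, run_a], normalize=True))
```

In this example `run_a` becomes the primary curve because it has more samples, and `run_b` only contributes where `run_a` is null.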

Tips and Tricks

Remember that there are lots of Flow tools out there that can help you clean up your data. For example, you can trim the flat spots from the top, base, and middle of your wells with the Null Repeated Log Samples tool. Here are some tips to help you come up with truly differentiated log clean-up Flows:

- Flows can easily be re-used. Just right-click and make a copy, then adjust the inputs/outputs so it’s ready for your new project.

- Remember that you can run multiple tools of the same type in one Flow. For example, if you wanted to set different criteria for trimming repeated samples from the middle of a log than you do from the top and base, you can use two (or more) Null Repeated Log Samples tools for the same curve alias.

- After running a tool, inspect the job log. We provide a summary of the job (logs in, logs out, and processing times), and many log tools also print details to help you understand what operations were performed.

- Run the Log Health Check tool to get a list of common problems with your LAS files.

- Utilize the Mnemonics Analysis tool to ensure that you are capturing all the available aliases in your project.

- Python is available and can be leveraged to build custom, internal tools for identifying and repairing suspect data.
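
As an example of the kind of custom check you might write in Python, the sketch below nulls out flat spots (runs of repeated samples) in a curve. It is a standalone illustration, not the Null Repeated Log Samples tool and not the Danomics Python API: the function name `null_repeated_samples` and the `min_run` threshold are assumptions.

```python
def null_repeated_samples(values, min_run=3):
    """Replace runs of at least min_run identical consecutive values with None."""
    cleaned = list(values)
    start = 0  # Index where the current run of identical values began.
    for i in range(1, len(values) + 1):
        # A run ends at the end of the list or when the value changes.
        if i == len(values) or values[i] != values[start]:
            if i - start >= min_run and values[start] is not None:
                for j in range(start, i):
                    cleaned[j] = None
            start = i
    return cleaned


gamma = [80.0, 80.0, 80.0, 82.5, 90.1, 90.1, 75.0, 75.0, 75.0, 75.0]
print(null_repeated_samples(gamma))
```

Short repeats, such as the two consecutive 90.1 samples, are left alone because they fall below `min_run`.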

And of course, remember that we are here to help! If you have any questions, just reach out to us at support@danomics.com.